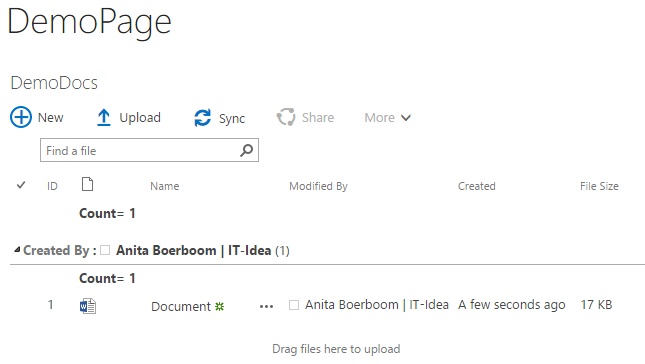

In one of my recent projects we faced the issue of showing documents and links together using a Content Search Webpart. The results had to be sorted alphabetically on the Title property.

Nothing fancy, you would think. I thought so too…

The result was that the Content Search Webpart first displayed all the links sorted alphabetically and then displayed all the documents sorted alphabetically.

After some research it turned out that the title of a link has a leading space. Sorting follows character order, in which a space comes before any digit or letter ([0-9A-Za-z]), so items with a leading space appear before everything else.
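To see why a leading space pushes the links to the top, here is a minimal sketch that sorts a few titles by ordinal (character code) comparison. The class name `LeadingSpaceSortDemo` and the sample titles are made up for illustration, and the search index may use a different collation than plain ordinal order, so treat this as an approximation of the behavior rather than what SharePoint does internally.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical helper, not part of SharePoint: shows how a leading space affects sort order.
public static class LeadingSpaceSortDemo
{
    // Returns a new list with the titles sorted by ordinal (character code) comparison.
    public static List<string> SortOrdinal(IEnumerable<string> titles)
    {
        var sorted = new List<string>(titles);
        sorted.Sort(StringComparer.Ordinal);
        return sorted;
    }
}
```

Sorting `"Budget.docx"`, `" Alpha link"`, `"Contract.docx"` and `" Zeta link"` this way puts both titles that start with a space first, followed by the two documents, which matches the grouping seen in the web part.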

A link consists of a URL and a description and is stored as ‘url, description’ (SPFieldUrlValue). So the separator between the URL and the description appears to be a comma followed by a space.

Apparently the search crawler splits only on the comma, which leaves a leading space on the description.
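The suspected behavior can be sketched as follows. This is only an illustration of splitting on the comma alone; it is not the crawler's actual code, and the class name `LinkValueSplitDemo` and the sample value are assumptions made for the example.

```csharp
using System;

// Hypothetical helper: mimics splitting a 'url, description' value on the comma only.
public static class LinkValueSplitDemo
{
    // Returns everything after the first comma, without trimming.
    // If there is no comma, the raw value is returned unchanged.
    public static string DescriptionAfterComma(string rawValue)
    {
        int comma = rawValue.IndexOf(',');
        return comma < 0 ? rawValue : rawValue.Substring(comma + 1);
    }
}
```

For a stored value such as `http://example.com/report, Annual report`, the returned description still carries the space that followed the comma, and only an explicit `TrimStart()` removes it.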

To fix this issue I had to create a custom content enrichment service. A custom service lets me modify the managed properties of the crawled items before they are added to the search index. The image below, published on MSDN, shows part of the process that takes place in the content processing component.

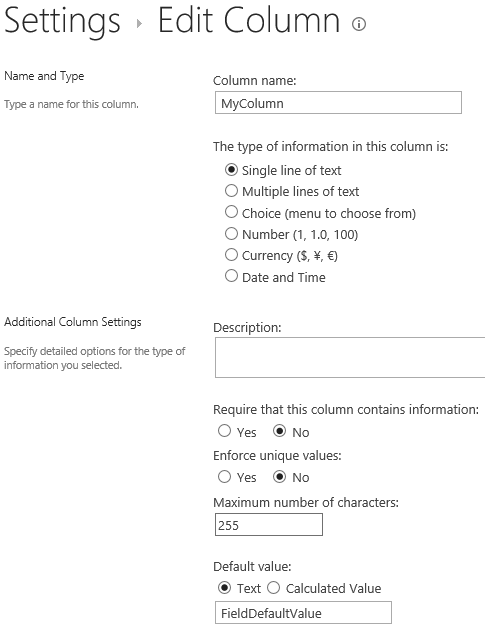

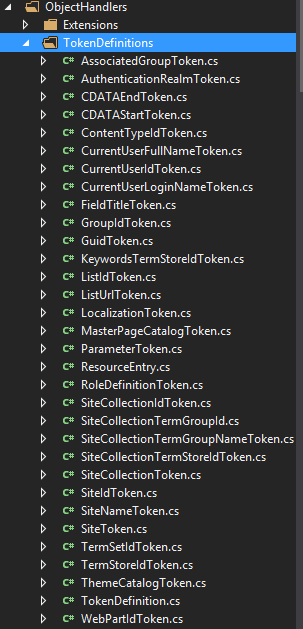

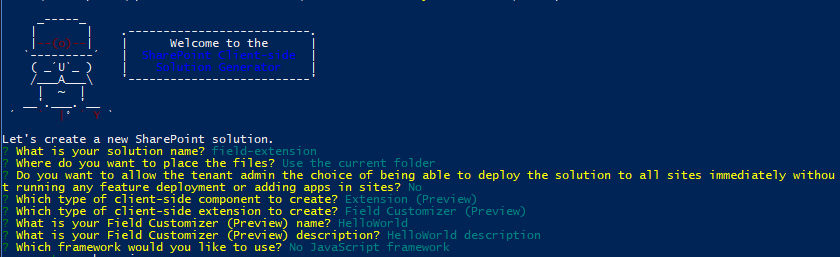

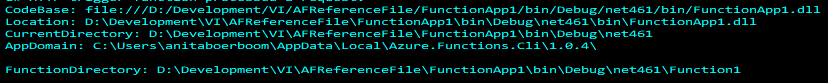

Creating the service itself is not a real challenge. Follow these steps:

- Create a WCF Service Application in Visual Studio

- Add a reference to Microsoft.Office.Server.Search.ContentProcessingEnrichment.dll (C:\Program Files\Microsoft Office Servers\15.0\Search\Applications\External)

- Delete IService1.cs

- Implement the IContentProcessingEnrichmentService interface in Service1.svc.cs so the service can accept requests from the content processing component.

- Delete the existing code in the class and implement the method ProcessItem. This is the method where you get the required properties for each item.

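```csharp
// Namespaces needed at the top of Service1.svc.cs:
using System;
using System.Collections.Generic;
using Microsoft.Office.Server.Search.ContentProcessingEnrichment;
using Microsoft.Office.Server.Search.ContentProcessingEnrichment.PropertyTypes;

// Fields inside the service class:

// Defines the name of the managed property 'Title'
private const string TitleProperty = "Title";

// Defines the error code for managed properties with an unexpected type.
private const int UnexpectedType = 1;

// Defines the error code for encountering unexpected exceptions.
private const int UnexpectedError = 2;

// Holds the result that is returned to the content processing component.
private readonly ProcessedItem processedItemHolder = new ProcessedItem
{
    ItemProperties = new List<AbstractProperty>()
};
```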

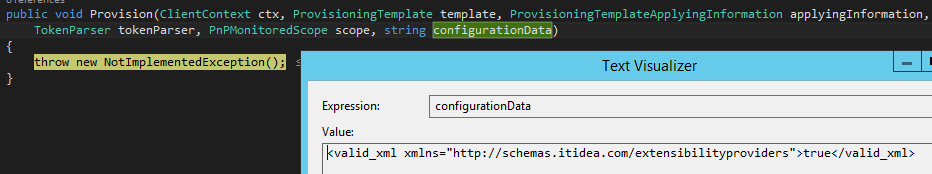

```csharp
public ProcessedItem ProcessItem(Item item)
{
    processedItemHolder.ErrorCode = 0;
    processedItemHolder.ItemProperties.Clear();

    try
    {
        // Iterate over each property received and locate the property we configured the system to send.
        foreach (var property in item.ItemProperties)
        {
            if (property.Name.Equals(TitleProperty, StringComparison.Ordinal))
            {
                var title = property as Property<string>;
                if (title == null)
                {
                    // The title property was not of the expected type.
                    // Update the error code and return.
                    // Errors can be found in ULS, filter on
                    // message contains Microsoft.Ceres.Evaluation.DataModel.EvaluationException
                    processedItemHolder.ErrorCode = UnexpectedType;
                    return processedItemHolder;
                }

                // Only return the property when the value actually changes.
                if (title.Value.StartsWith(" ", StringComparison.Ordinal))
                {
                    title.Value = title.Value.TrimStart();
                    processedItemHolder.ItemProperties.Add(title);
                }
            }
        }
    }
    catch (Exception)
    {
        // Report any unexpected failure through the error code.
        processedItemHolder.ErrorCode = UnexpectedError;
    }

    return processedItemHolder;
}
```

item.ItemProperties contains all the input properties received by the service. These input properties will be configured with PowerShell later on.

The code checks whether the Title property starts with a space, trims it if so, and returns the changed value to the content processing component.

When something goes wrong, the exception is thrown by ‘ContentProcessingEnrichmentClientEvaluator’ and is of type ‘Microsoft.Ceres.Evaluation.DataModel.EvaluationException’. Filter on this when monitoring the ULS logs.
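Because ProcessItem depends on the SharePoint assemblies, it can help to isolate the trimming decision in a small pure function that can be tested without a farm. The sketch below is one way to do that; `TitleNormalizer` and `TryTrimLeadingSpace` are hypothetical names that do not appear in the service above, and the null check is an extra safeguard that the original code does not have.

```csharp
using System;

// Hypothetical helper: the trimming rule from ProcessItem, without SharePoint dependencies.
public static class TitleNormalizer
{
    // Returns true when the value starts with a space and therefore needs to be updated;
    // 'trimmed' then holds the value without leading whitespace.
    // Returns false (and the value unchanged) for null or already clean titles.
    public static bool TryTrimLeadingSpace(string value, out string trimmed)
    {
        if (value != null && value.StartsWith(" ", StringComparison.Ordinal))
        {
            trimmed = value.TrimStart();
            return true;
        }

        trimmed = value;
        return false;
    }
}
```

ProcessItem could then call this helper and add the Title property to the output list only when it returns true, so unchanged titles are not sent back to the content processing component.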

- Add the following inside <system.serviceModel> in the web.config file

```xml
<bindings>
  <basicHttpBinding>
    <binding maxReceivedMessageSize="8388608">
      <readerQuotas maxDepth="32"
                    maxStringContentLength="2147483647"
                    maxArrayLength="2147483647"
                    maxBytesPerRead="2147483647"
                    maxNameTableCharCount="2147483647" />
      <security mode="None" />
    </binding>
  </basicHttpBinding>
</bindings>
```

- Host the service in IIS by creating a virtual directory and mapping it to the physical path of the WCF service application. Convert it to an application by right-clicking it and selecting ‘Convert to Application’.

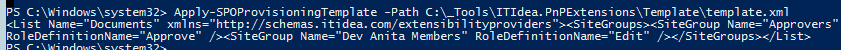

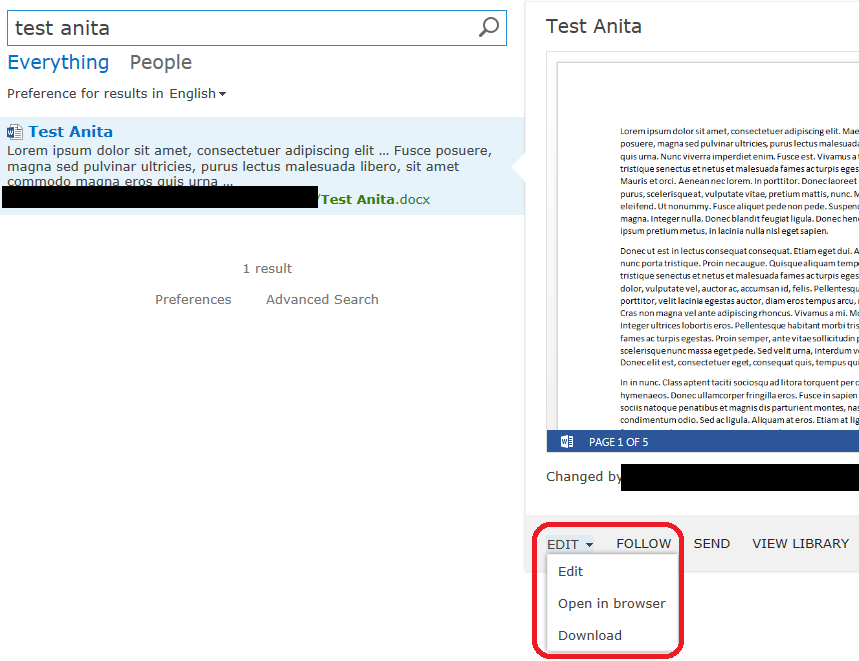

- Configure the Search Service Application to use the Content Enrichment service by using PowerShell:

```powershell
$ssa = Get-SPEnterpriseSearchServiceApplication
$config = New-SPEnterpriseSearchContentEnrichmentConfiguration
$config.Endpoint = "http://localhost:8081/Service1.svc" # url of the service
$config.InputProperties = "Title"
$config.OutputProperties = "Title"
$config.Trigger = 'StartsWith(Title, " ")'
$config.FailureMode = "Warning"
$config.SendRawData = $True
$config.MaxRawDataSize = 8192
Set-SPEnterpriseSearchContentEnrichmentConfiguration -SearchApplication $ssa -ContentEnrichmentConfiguration $config

# Show the current configuration:
#Get-SPEnterpriseSearchContentEnrichmentConfiguration -SearchApplication $ssa

# Remove the configuration again:
#Remove-SPEnterpriseSearchContentEnrichmentConfiguration -SearchApplication $ssa
```

It is important to set a meaningful trigger, because calls to the service are synchronous: the search engine blocks until the item is processed or a timeout occurs. This happens for every single item that the trigger passes to the Content Enrichment Service.

- Run a full crawl

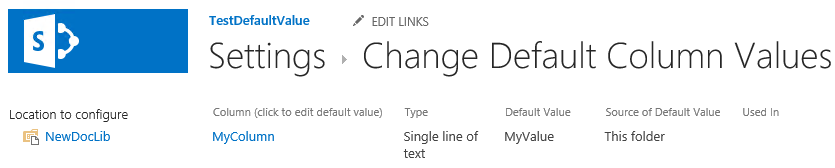

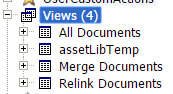

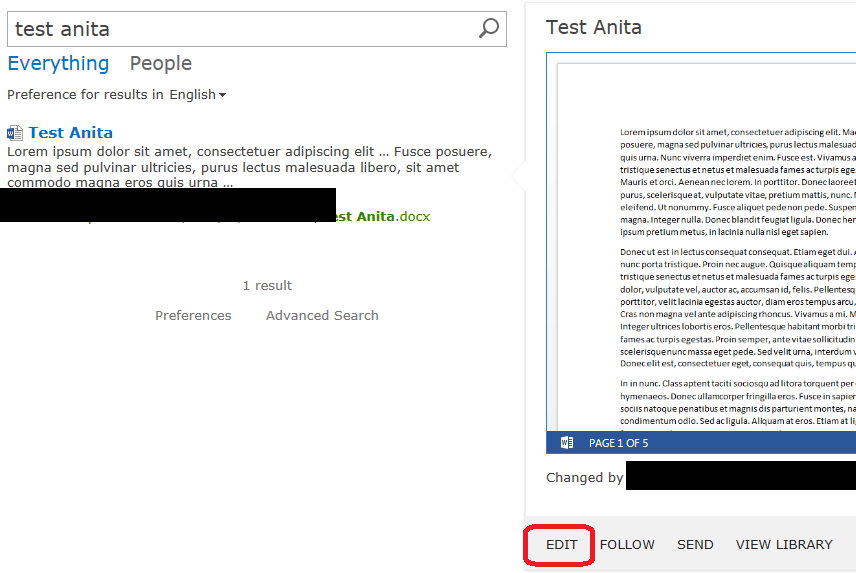

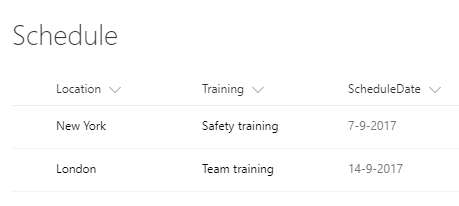

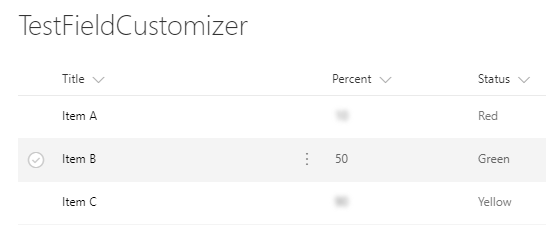

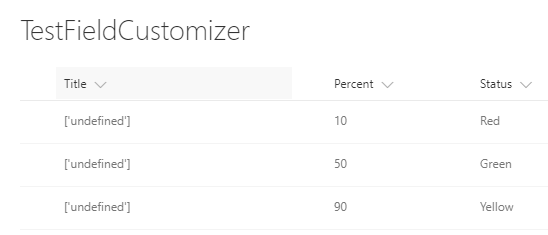

Now documents and links are truly sorted alphabetically:

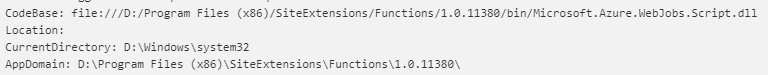

Do not deploy the service in a SharePoint web application

SharePoint web applications are authenticated resources, while the content processing pipeline invokes the Content Enrichment web service with anonymous service calls. So deploy the Content Enrichment web service in an anonymous IIS website. Otherwise you will find the errors ‘Failed to send the item to the content processing enrichment service.’ and ‘The remote server returned an error: (401) Unauthorized.’ in the ULS logs.

Summary

Creating a custom Content Enrichment Service isn’t that hard, but I find it disappointing that I had to do so for the particular problem we faced:

Sorting links and documents by title and showing them together in one Content Search web part.

Update 07/11/2014

Since upgrading from the March 2013 CU to SP1 and the September 2014 CU, updating the Title managed property suddenly stopped working. Somewhere in a CU Microsoft changed the behavior of updating some system managed properties without communicating about it. You can read more about it over here.

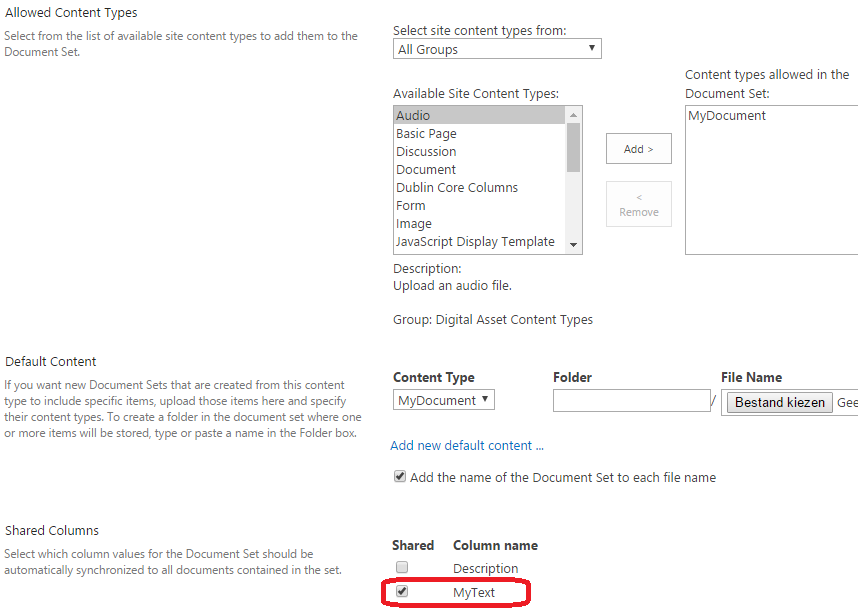

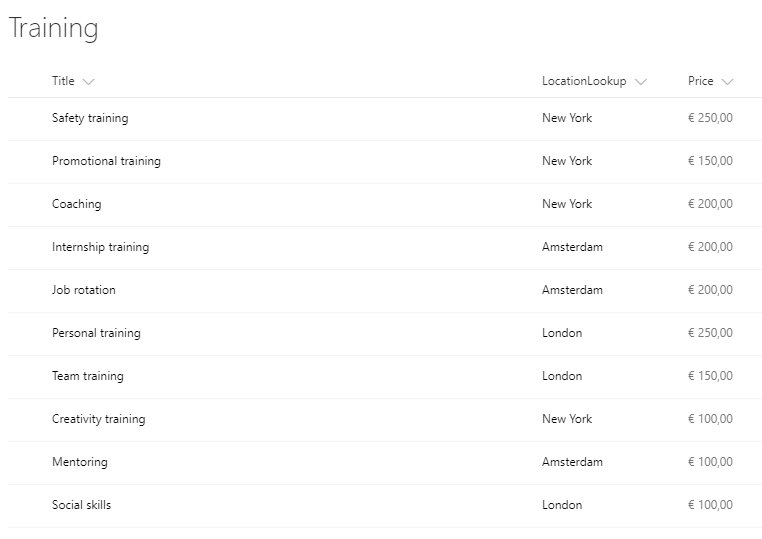

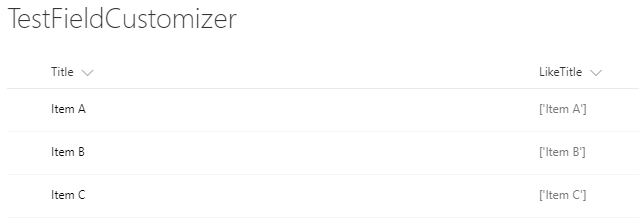

The solution is to create your own managed property, copy the settings from the Title managed property and sort by this one…