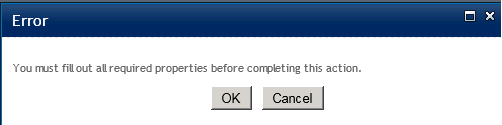

In one of my posts I wrote a solution for the nasty popup error message:

![MMDRequiredFieldErrorMessage]()

This post provides some background information about this issue.

The out-of-the-box ribbon and its functionality are built from the ribbon definition XML in the cmdui.xml file, script from the SP.Ribbon.js file and server-side code from several assemblies. In this case we’re interested in the assemblies Microsoft.SharePoint and Microsoft.SharePoint.Publishing (in the case above, publishing is enabled).

SharePoint loads a web control, SPPageStateControl, on every wiki or publishing page. This control handles the ribbon buttons that control the state of these pages:

    this.commandHandlers[3] = new EditCommandHandler(this);
    this.commandHandlers[0] = new SaveCommandHandler(this);
    this.commandHandlers[1] = new SaveBeforeNavigateHandler(this);
    this.commandHandlers[4] = new DontSaveAndStopCommandHandler(this);
    this.commandHandlers[2] = new SaveAndStopEditCommandHandler(this);
    this.commandHandlers[5] = new CheckinCommandHandler(this);
    this.commandHandlers[6] = new CheckoutCommandHandler(this);
    this.commandHandlers[7] = new OverrideCheckoutCommandHandler(this);
    this.commandHandlers[8] = new DiscardCheckoutCommandHandler(this);
    this.commandHandlers[11] = new PublishCommandHandler(this);
    this.commandHandlers[12] = new UnpublishCommandHandler(this);
    this.commandHandlers[9] = new SubmitForApprovalCommandHandler(this);
    this.commandHandlers[10] = new CancelApprovalCommandHandler(this);
    this.commandHandlers[13] = new ApproveCommandHandler(this);
    this.commandHandlers[14] = new RejectCommandHandler(this);
    this.commandHandlers[15] = new DeleteCommandHandler(this);
    this.commandHandlers[0x10] = new UpdatePageStateCommandHandler(this);

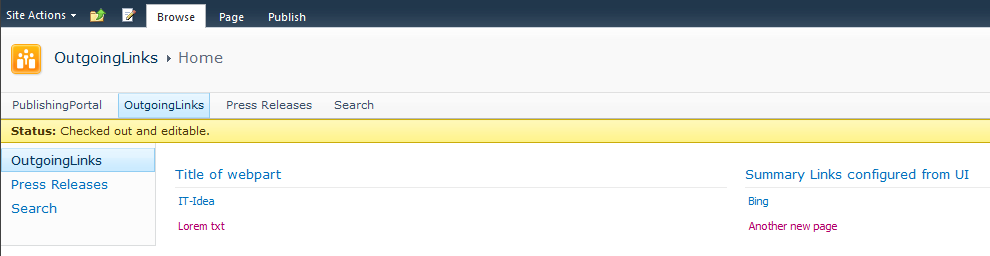

A page can be in one or more of the following states:

- in display or edit mode

- checked out to the current user, another user or to the system user

- checked in

- scheduled

- rejected

- pending approval

- published

- draft

- not valid

- and more

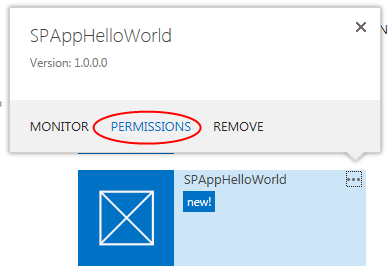

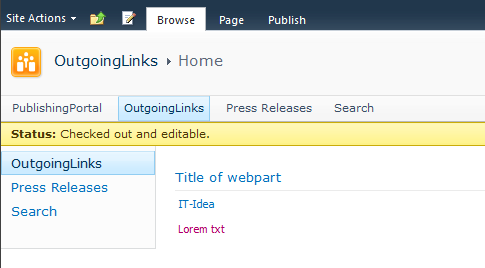

Based on these states:

- status messages can be displayed (like ‘Checked out and editable’, ‘Checked in and viewable by authorized users’ or a number of others)

- error messages can be displayed (like ‘This page contains content or formatting that is not valid. You can find more information in the affected sections.’)

- ribbon buttons can be trimmed

- the initial tab can be set

With this background on the SPPageStateControl, we can investigate how the validation of required fields differs between saving a page and publishing it directly.

Save & Close

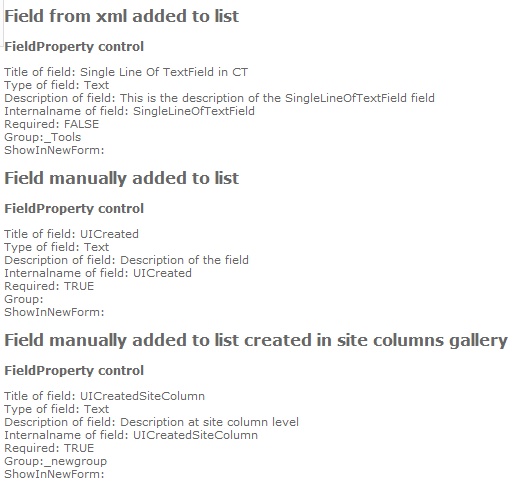

The command that handles the Save & Close button is the SaveAndStopEditCommandHandler. When this handler fires, the page is validated by calling this.Page.Validate(). When one of the required fields on the page is left empty, the page isn’t valid: an error condition is set and a status message is added with a message from a resource file.

The OnPreRender method of the SPPageStateControl populates the status messages based on the state of the page, in this case ‘Checked out and editable’ and ‘This page contains content or formatting that is not valid. You can find more information in the affected sections.’. These status messages and the error message are serialized and written to the page as script.

A serialized status message contains a StatusBody (the text of the message), a StatusTitle (‘Error:’ or ‘Status:’) and a StatusPriority (‘yellow’).

A serialized error message consists of a Message, a Title, a ButtonCount and, for each button, a ButtonText and a ButtonCommand.
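To make the two shapes concrete, here is a minimal JavaScript sketch of what the deserialized objects might look like on the client. Only the property names are taken from the description above. The helpers `makeStatusMessage` and `makeErrorState`, the placeholder title, and the use of arrays for the button texts and commands are assumptions for illustration, not SharePoint's actual serialization code.

```javascript
// Hypothetical helpers; only the property names come from the text above.
function makeStatusMessage(body, isError) {
  return {
    StatusBody: body,                            // text of the message
    StatusTitle: isError ? "Error:" : "Status:", // prefix shown before the body
    StatusPriority: "yellow"                     // priority (colour) of the status bar
  };
}

function makeErrorState(message, title, buttonTexts, buttonCommands) {
  // Every button needs both a caption and a script command.
  if (buttonTexts.length !== buttonCommands.length) {
    throw new Error("Each button needs both a text and a command");
  }
  return {
    Message: message,
    Title: title,
    ButtonCount: buttonTexts.length,
    ButtonText: buttonTexts,
    ButtonCommand: buttonCommands
  };
}

const status = makeStatusMessage("Checked out and editable", false);

// An error state that carries only a message: no buttons at all.
const messageOnlyError = makeErrorState(
  "This page contains content or formatting that is not valid.",
  "(title from resource file)", // placeholder, the real title is a resource string
  [],
  []
);
```

An error state built without buttons ends up with a ButtonCount of 0, which matters for whether the client shows a dialog or only the status bar.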

On the client side, the status and error messages are shown at initialization:

    if (SP.Ribbon.PageState.NativeErrorState.ButtonCount > 0 || !SP.Ribbon.SU.$2(SP.Ribbon.PageState.NativeErrorState.ShowErrorDialogScript)) {
        SP.Ribbon.PageState.PageStateHandler.showErrorDialog();
    }
    SP.Ribbon.PageState.PageStateHandler.showPageStatus();

The error dialog (this is the popup!) is shown when the ButtonCount is greater than zero or when ShowErrorDialogScript is filled.
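The condition can be isolated in a small, self-contained sketch. It assumes that `SP.Ribbon.SU.$2` behaves like a null-or-empty string check, which is what the minified name appears to stand for here. `isNullOrEmpty` and `shouldShowErrorDialog` are hypothetical names, not SharePoint functions.

```javascript
// Assumption: SP.Ribbon.SU.$2 behaves like a null-or-empty string check.
function isNullOrEmpty(value) {
  return value === null || value === undefined || value === "";
}

// Mirrors the condition in the initialization script above.
function shouldShowErrorDialog(errorState) {
  return errorState.ButtonCount > 0 ||
         !isNullOrEmpty(errorState.ShowErrorDialogScript);
}

// Error state with only a message: no buttons and no dialog script.
const messageOnly = {
  Message: "Page is not valid",
  ButtonCount: 0,
  ShowErrorDialogScript: null
};

// Error state with two buttons.
const withButtons = {
  Message: "Required fields are missing",
  ButtonCount: 2,
  ShowErrorDialogScript: null
};

console.log(shouldShowErrorDialog(messageOnly)); // false
console.log(shouldShowErrorDialog(withButtons)); // true
```

So an error state that carries nothing but a message never triggers the dialog; it takes at least one button or a dialog script.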

The Save & Close button added an error message with the following statement:

    this.SetErrorCondition(msg, 0, null, null);

The SetErrorCondition is implemented as:

    public void SetErrorCondition(string ErrorMessage, uint RemedialActionCount, string[] RemedialActionButtonText, string[] RemedialActionCommand)
    {
        this.errorTitle = SPResource.GetString("PageStateErrorTitle", new object[0]);
        this.errorMessage = ErrorMessage;
        this.remedialActionCount = RemedialActionCount;
        this.remedialActionButtonText = RemedialActionButtonText;
        this.remedialActionCommand = RemedialActionCommand;
    }

This means the error condition was set with only a message, while the other properties were left empty. This is why the popup doesn’t show and only the status messages do.

Publish as first ‘save’ action

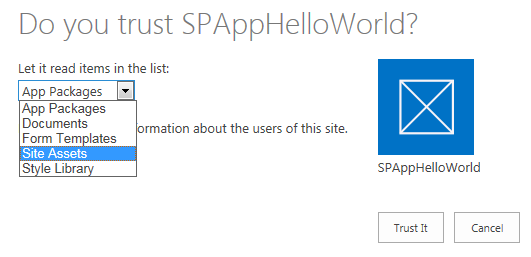

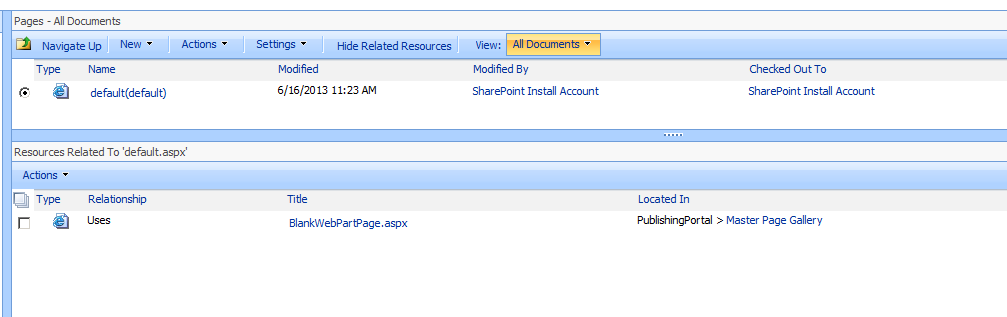

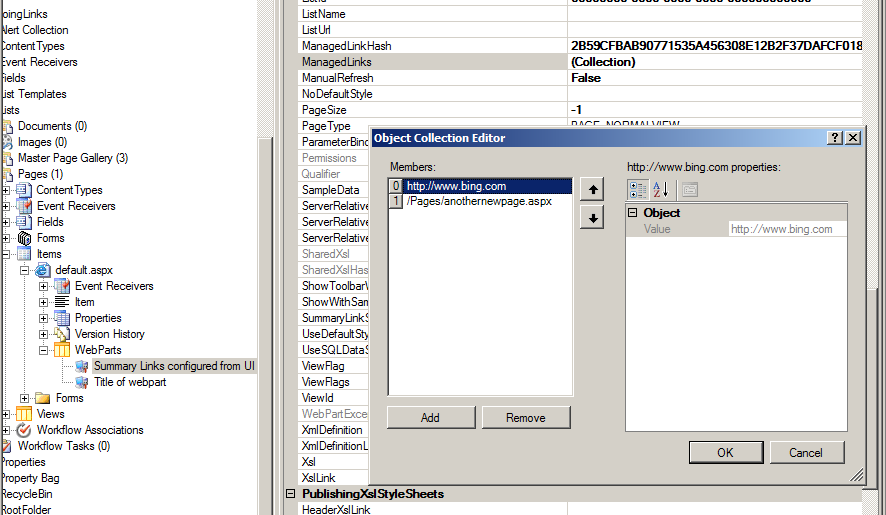

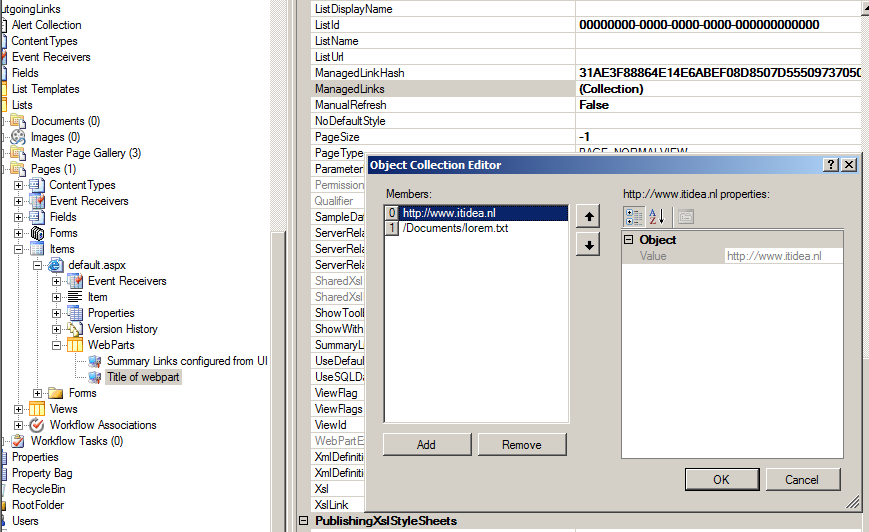

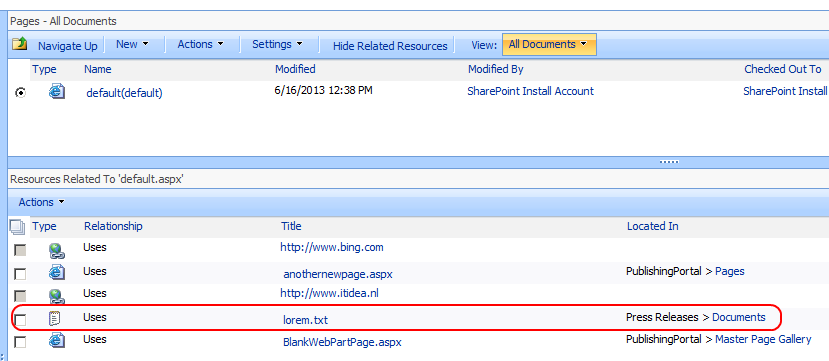

The command that handles the Publish button is the PublishingPagePublishHandler. When this handler fires, each required field on the list item is checked to see whether it has a value. This differs from Save & Close, which validates the page immediately by calling this.Page.Validate() without the explicit check for missing required fields.

The check for missing required fields loops through the FieldLinks of the ContentType to see if anything required is missing. If so, it builds the edit properties URL of the list item and sets it as the ButtonCommand on the error message it formats.
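The loop can be pictured with a short JavaScript sketch. This is not the server-side code, which lives in the Microsoft.SharePoint.Publishing assembly. Here `fieldLinks` is a plain array standing in for the ContentType's FieldLinks, `storedItem` is a plain object standing in for the list item, and the rule for what counts as an empty value is an assumption. The field name `Category` is hypothetical.

```javascript
// Assumption: a value counts as "missing" when it is null, undefined,
// a blank string or an empty array (e.g. a multi-value field).
function isEmptyValue(value) {
  if (value === null || value === undefined) return true;
  if (typeof value === "string") return value.trim() === "";
  if (Array.isArray(value)) return value.length === 0;
  return false;
}

// fieldLinks: [{ Name, Required }] standing in for the ContentType's FieldLinks.
// item: plain object standing in for the values stored on the list item.
function findMissingRequiredFields(fieldLinks, item) {
  return fieldLinks
    .filter(link => link.Required && isEmptyValue(item[link.Name]))
    .map(link => link.Name);
}

const fieldLinks = [
  { Name: "Title", Required: true },
  { Name: "Category", Required: true },  // hypothetical managed metadata field
  { Name: "Comments", Required: false }
];
const storedItem = { Title: "test01", Category: "", Comments: "" };

// Only Category is both required and empty.
const missing = findMissingRequiredFields(fieldLinks, storedItem);
console.log(missing.join(", ")); // Category
```

Note that the check looks at the values stored on the list item, not at what is currently typed into the page.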

The call to SetErrorCondition differs from the one made by Save & Close; this time all properties are filled:

    SetErrorCondition(Resources.GetString("MissingRequiredFieldsErrorMessage"), 2,
        new string[] { SPResource.GetString("PageStateOkButton", new object[0]), SPResource.GetString("ButtonTextCancel", new object[0]) },
        new string[] { builder.ToString(), "SP.Ribbon.PageState.PageStateHandler.dismissErrorDialog();" });

The error message now consists of the Message and Title from the resource file, a ButtonCount of 2 and, for each button, a ButtonText from the resource file (‘OK’ and ‘Cancel’) and a ButtonCommand. The ‘OK’ button gets the result of builder.ToString(), in this case ‘SP.Utilities.HttpUtility.navigateTo('/Pages/Forms/EditForm.aspx?ID=4&Source=%2FPages%2Ftest01%2Easpx');’. The ‘Cancel’ button gets ‘SP.Ribbon.PageState.PageStateHandler.dismissErrorDialog();’.

The OnPreRender method of the SPPageStateControl populates the serialized status and error messages based on the state of the page and, at the end of the method, adds script to the page, just as with Save & Close.

- The SerializedPageStatusMessages method (server side) populates SP.Ribbon.PageState.ImportedNativeData.StatusBody and StatusTitle, which are used in showPageStatus (client side).

- SerializedErrorState (server side) populates PageErrorState (client side).

- The PageErrorState variable (client side) is set to this.SerializedErrorState, which builds an object that client script can handle (Message, Title, ButtonCount, ButtonText, ButtonCommand).

- SP.Ribbon.PageState.NativeErrorState is then set to the PageErrorState.

On the client side, the status and error messages are shown at initialization:

    if (SP.Ribbon.PageState.NativeErrorState.ButtonCount > 0 || !SP.Ribbon.SU.$2(SP.Ribbon.PageState.NativeErrorState.ShowErrorDialogScript)) {
        SP.Ribbon.PageState.PageStateHandler.showErrorDialog();
    }
    SP.Ribbon.PageState.PageStateHandler.showPageStatus();

Where the ButtonCount is now 2

![MMDRequiredFieldNativeErrorState]()

and the error dialog will be shown as a modal dialog:

![MMDRequiredFieldErrorMessage]()

Once the page has been saved with the required managed metadata field filled

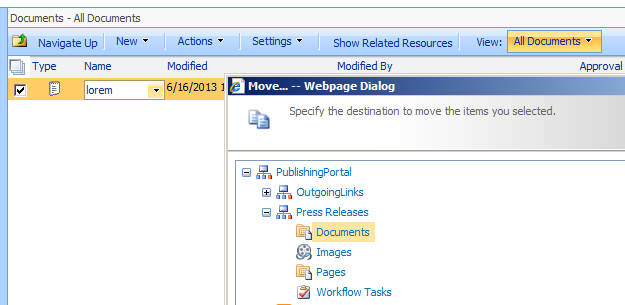

When the page has been saved with the required managed metadata field filled and is then put in edit mode again, the field is emptied and the page is published, the result differs from the above: no popup error message is shown, and all messages appear as status messages.

The code passes the check for missing required fields, because the list item still holds the previously saved value(!), and then falls back on validating the page, as with Save & Close. This validation fails, the error message is added as a status message and the process continues as with Save & Close.
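The difference between the two publish attempts can be modelled in a few lines of JavaScript. This is a simplified sketch of the behavior described in this post, not the actual handler code: `publish`, `storedItem`, `formValues` and the field name `Category` are all hypothetical, and the two steps only imitate the list item check and the page validation.

```javascript
// Hypothetical model: `storedItem` holds the values last saved to the list item,
// `formValues` holds what is currently entered in the edit form on the page.
function publish(requiredFields, storedItem, formValues) {
  const isEmpty = v => v === null || v === undefined || v === "";

  // Step 1: check for missing required fields against the stored list item.
  const missingOnItem = requiredFields.filter(name => isEmpty(storedItem[name]));
  if (missingOnItem.length > 0) {
    // Error condition with two buttons: the client shows the popup.
    return { outcome: "popup", buttonCount: 2 };
  }

  // Step 2: fall back on page validation, which sees the current form values.
  const invalidOnPage = requiredFields.filter(name => isEmpty(formValues[name]));
  if (invalidOnPage.length > 0) {
    // Error condition with a message only: shown in the status bar.
    return { outcome: "status message", buttonCount: 0 };
  }

  return { outcome: "published", buttonCount: 0 };
}

const required = ["Category"]; // hypothetical required managed metadata field

// First publish: the page was never saved with a value, so the stored item is empty.
const first = publish(required, { Category: "" }, { Category: "" });

// Later publish: a value was saved earlier, then the field was emptied in the form.
const later = publish(required, { Category: "News" }, { Category: "" });

console.log(first.outcome); // popup
console.log(later.outcome); // status message
```

The same empty field on the page therefore leads to two different user experiences, depending only on what was stored on the list item beforehand.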

The popup error message confuses a lot of users; they don’t understand why it only appears some of the time.

They read the message, press ‘OK’ and are navigated away from the page to the edit properties form. And then what? The field was on the page, so why am I now on another page?

The solution can be found here.

Summary

With a few lines of code the nasty popup error message can be prevented from being displayed. The background story behind it is quite large, but important to understand. Be careful never to overwrite SharePoint messages when publishing, because it can lead to unexpected behavior.